Google’s long-awaited Performance Max Channel Report is finally here! Well. Almost. Currently in beta and rolling out to a limited set of advertisers, this new feature marks a major step forward in bringing much-needed transparency to PMax. For years, advertisers have been asking: Where’s my budget going? Now, there’s finally an…

Ecommerce growth strategies

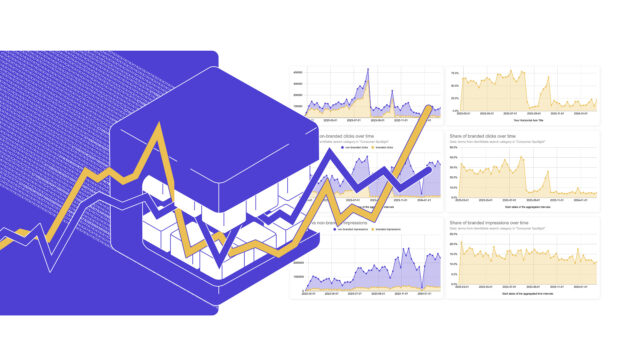

State of Performance Max 2025: Insights from 4,000+ campaigns

Believe it or not, it has been three years since Google started the wide-scale rollout of Performance Max campaigns. Time flies when you're having fun…

PMax in 2025: The ultimate campaign optimization guide

What? Another “How to PMax” article? Yes, but this one's the most comprehensive you'll read yet! In 2024 alone, we’ve set up over 700 campaigns for…

Wake up: Your ROAS addiction won’t make you profitable!

Here is a harsh truth: Too many advertisers are so addicted to ROAS that they completely ignore tactics that will actually make their business more…

AI limitations in PPC: The human touch in Performance Max

Let’s talk about the elephant in the room: With the rise of PMax’s AI-driven advertising, what are the chances of technology replacing the human marketer?…

Multi-dimensional Product Segmentation: Road to PMax success

The tides in digital advertising are shifting. Highly automated, all-encompassing digital campaign types, like Google and Microsoft’s Performance Max (PMax), ring in a new era…

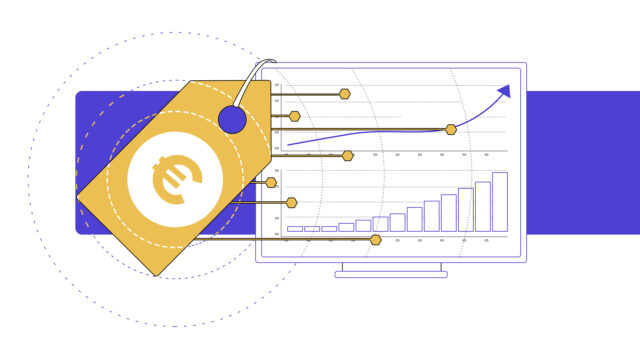

How pricing influences conversion rates: A case study

In 2024, the global economy will face unprecedented challenges and transformations. Inflationary pressures and fluctuating market conditions shape consumer spending habits worldwide. Price sensitivity has…

Performance Max Q&A: Real answers to your biggest PMax headaches

It’s been three years of Performance Max. Three years of change, improvement, but also frustration. In our recent State of PMax webinar, paid search pros…

Black Friday Google Ads Q&A: Expert insights for higher profits

With the most competitive holiday season yet fast approaching, it’s more important than ever to fine-tune your Black Friday Google Ads campaign strategy, budget allocation,…